Code from LLMs will be the default rather than the exception

(Why babysitting your AI pair-programmer will go the way of the elevator chauffeur)

The Review Reflex (AKA: Today)

Right now it can be sensible to eyeball every line your AI coding assistant spits out.

The models hallucinate, mis-import, and decide eval() is a good idea.

But fast-forward a few release cycles and that ritual will flip from “best practice” to time-wasting anti-pattern.

With well-crafted prompts plus the right project context, an LLM will out-code the average engineer: safer, faster, and with fewer Friday-night pager alerts.

Reading every generated line will feel like manually checking the elevator cables before riding to the tenth floor.

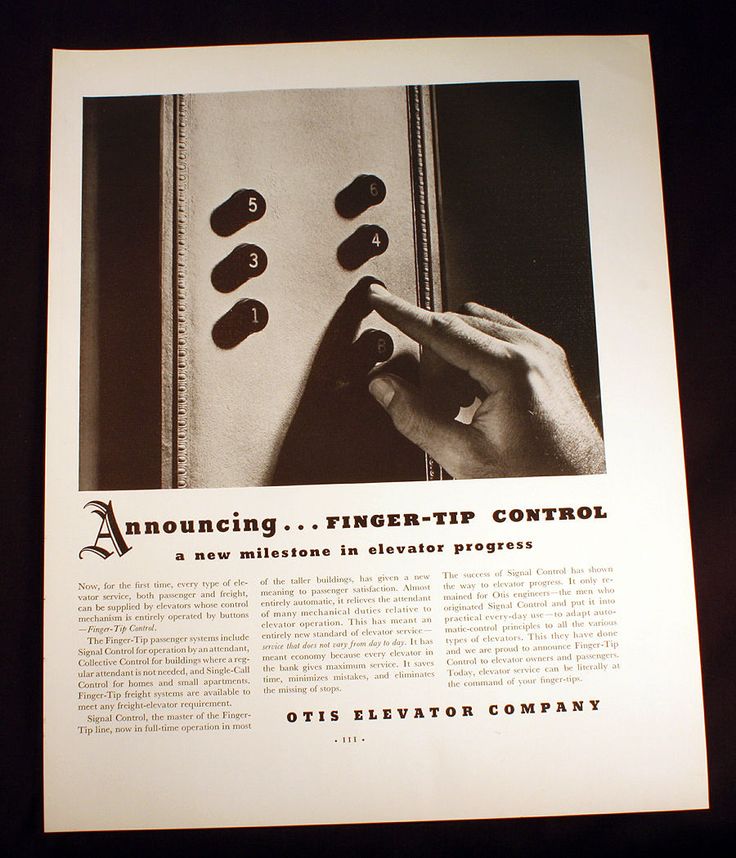

Remember when humans operated elevators?

- 1852-1854 – Elisha Otis invents the safety brake, then demos it at the 1854 New York World’s Fair by having his own hoist cable cut [1]. Crowd loses its mind.

- 1888-1895 – Push-button patents arrive, but nobody trusts an empty cab [2].

- 1900-1930s – Uniformed operators move the lever, crack jokes, and give riders the warm fuzzies [2].

- 1945 – 15,000 New York elevator operators strike [3]; Wall Street loses $100M in a week. Office drones discover they can, in fact, press a button.

- Late 1940s – Auto-levelling, door interlocks, giant red STOP buttons, ad campaigns starring kids riding alone [4]. Panic melts.

- 1950 – Otis Autotronic: high-speed lifts, zero operators, zero riots [5][6].

- 1970s-Now – Operators survive only in Art Deco hotels and Wes Anderson films. Everyone else smashes a button and checks Slack.

During summer breaks in college (mid-2000s) I operated a 1917 Otis elevator in an old hotel. I had to time the floor alignments just right so the elderly hotel guests wouldn’t face-plant getting in and out of the elevator.

It was a novelty for old people.

Anyone under 50 found it annoying to need a hungover 19-year-old to get them to the 3rd floor.

Today’s developers are elevator operators

The impulse to babysit every LLM-generated pull request mirrors the bow-tied attendant who once eased elevator levers for nervous passengers. It feels responsible, until you realize the machinery has long since outgrown the need.

Each model version slashes hallucination rates while our prompt craft, test harnesses, and policy scanners bulk up the guardrails. Feed the agent rich domain context plus explicit acceptance criteria and the result is safer than what your sleep-starved teammate would have typed at 2 a.m.

Spending hours scrolling through a robot-written file tree will soon be as absurd as prying open the doors to inspect the cables before every ride. The high-leverage skill shifts upstream: design the prompt, wire the automated checks, then trust the system you just built.

Developers who cling to line-by-line audits will join uniformed lift operators in the history museum. Those who master the tooling will ship faster, sleep more, and invest their brains in problems no LLM can yet solve.

What if it produces bad code?

Mash the buttons for 2-9 on your way to the 10th floor and you’ll stop eight extra times.

Same with LLMs:

# Prompt

"Build me a full-stack e-commerce site.

I refuse to describe any more details. K thx bye."

Result: a Franken-app filled with security holes. That’s your fault, not the model’s.
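For contrast, here is a minimal sketch of what pressing only the button you need might look like. The `PromptSpec` class, its field names, and the sample project details are all hypothetical, made up for illustration; nothing here is a real vendor API. It just assembles a goal, some context, and explicit acceptance criteria into text, and refuses to render a prompt that has no definition of "done".

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the details a lazy prompt leaves out."""

    goal: str
    context: list[str] = field(default_factory=list)
    acceptance_criteria: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Render the spec as plain text to hand to any coding assistant."""
        if not self.acceptance_criteria:
            # No acceptance criteria means nothing to check the output against.
            raise ValueError("add at least one acceptance criterion")
        lines = [f"Goal: {self.goal}", "", "Context:"]
        lines += [f"- {item}" for item in self.context]
        lines += ["", "Acceptance criteria:"]
        lines += [
            f"{n}. {item}"
            for n, item in enumerate(self.acceptance_criteria, start=1)
        ]
        return "\n".join(lines)


# Illustrative values only; swap in your own project's details.
spec = PromptSpec(
    goal="Add a checkout endpoint to the existing store API",
    context=[
        "Payments go through the existing billing module",
        "Follow the error-handling style already used in the orders module",
    ],
    acceptance_criteria=[
        "All SQL uses parameterized queries",
        "Card numbers are never logged",
        "Existing tests still pass",
    ],
)
print(spec.render())
```

The point is less the class than the habit: if you can't fill in the acceptance criteria, the model can't hit them either.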

New Skills, Same Building

Yesterday we memorized syntax. Tomorrow we orchestrate fleets of synthetic co-workers.

Think “chief architect,” not “human compiler.” The LLM writes the rafters and drywall; you decide whether the house has three floors and an escape route, and whether it meets code.

What rises in value:

- Product decomposition – Translating squishy business goals into explicit modules, contracts, and acceptance tests the model can target.

- Prompt architecture – Crafting layered prompts, examples, and context windows so the AI builds your design, not its nearest-neighbor guess.

- Guardrail engineering – Policy filters, sandboxing, and automated audits that trap security or compliance violations before they ship.

- Observability & feedback loops – Instrumentation that lets both humans and models learn from prod in near real time.

- Ethical & economic governance – Balancing velocity with responsibility, cost ceilings, and carbon budgets.

Notice what’s missing: hand-wrangling null checks, re-aligning braces, arguing tabs vs. spaces. The robot’s got that. Your job is to point it at the right skyline and keep the blueprints updated.
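To make the guardrail engineering item a little more concrete, here is a small sketch of a policy scanner that catches the `eval()` habit mentioned earlier. It uses Python's standard `ast` module; the `BANNED_CALLS` deny-list and the `find_banned_calls` helper are hypothetical names for illustration, and a real policy would be broader and tuned to your team.

```python
import ast

# Hypothetical deny-list; a real policy would cover far more than this.
BANNED_CALLS = {"eval", "exec"}


def find_banned_calls(source: str) -> list[tuple[int, str]]:
    """Return (line number, name) for each direct call to a banned builtin."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        # Only matches bare names such as eval(...); aliased or
        # attribute-style calls would need extra handling.
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id in BANNED_CALLS
        ):
            findings.append((node.lineno, node.func.id))
    return sorted(findings)


# Stand-in for a snippet an assistant might have generated.
generated = "x = input()\nprint(eval(x))\n"
print(find_banned_calls(generated))  # [(2, 'eval')]
```

Wire something like this into CI and the check runs on every change, whether a human or a model wrote it.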

Monday Checklist

- Replace “code review” with prompt review.

- Teach juniors system design, not for-loop trivia.

- Automate checks until you trust them more than Steve from QA.

- When the bot borks, fix the prompt/rules/context. Trim the fat, not the muscle.

Going Up

A kid in 1950 could press the 10th floor button and arrive alive.

A thoughtful engineer with a clearly articulated goal can get production-ready code out of an AI coding assistant today.

Stop waiting for the operator. Take control of what your AI is building for you. Press the button. Ship.

1. Wired, “March 23 1857: Mr. Otis Gives You a Lift,” March 2010.

2. Lee Gray, “The History of Operatorless Elevators: Push-Button Control 1886-1895,” Elevator World, April 2023.

3. Henry Greenidge, “How a Historic Strike Paved the Way for the Automated Elevator,” Medium, May 2020.

4. NPR Planet Money, “Remembering When Driverless Elevators Drew Skepticism,” July 31 2015.

5. Otis Elevator Company, “Company History Timeline.”

6. “Otis Autotronic,” Elevator Wiki.